
So, what is a Tensor Processing Unit (TPU)? You almost certainly know what a CPU is: the main processor of every device. Intel, AMD, and Qualcomm have been producing such chips for a long time. Here, you can check out some of the most famous CPUs of all time.

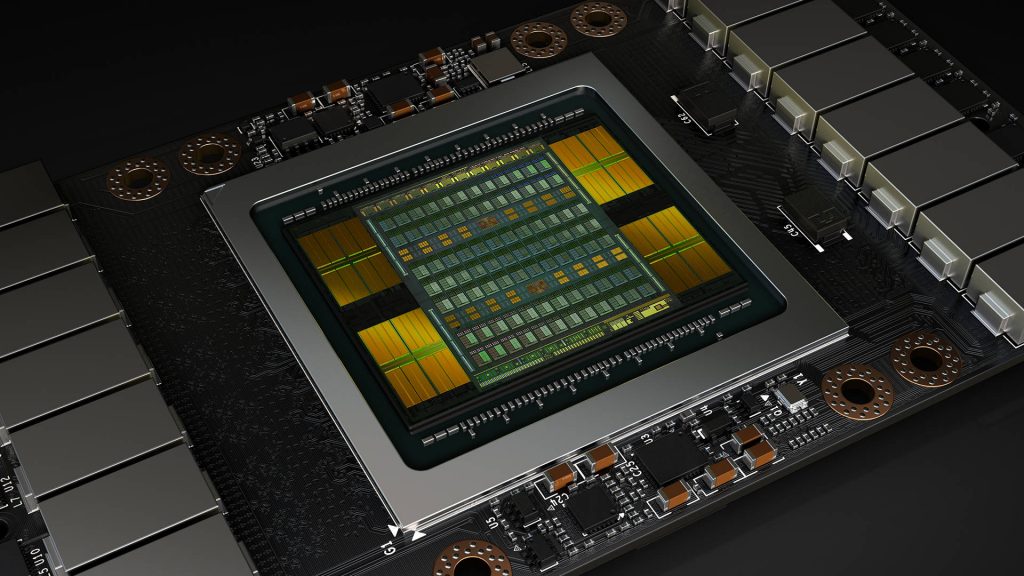

GPU is probably a term you know too. The Graphics Processing Unit renders 3D video games on your device, and it is also widely used to train AI.

But have you ever heard of a TPU?

TPU stands for Tensor Processing Unit. It is a type of application-specific integrated circuit (ASIC) created by Google. ASICs became widely known during the cryptocurrency boom. These processors are highly specialized and built for a narrow range of tasks, such as matrix multiplications and tensor operations. Unlike CPUs and GPUs, which are designed for more general-purpose work, TPUs exist mainly to accelerate machine learning workloads: a TPU cannot serve many different applications or run a demanding 3D game. TPUs are optimized for the high-throughput, low-power, low-latency operations needed to train neural networks (Machine Learning).

In short, a TPU trains machine learning models more cheaply and efficiently than other solutions, for the workloads it was designed for, while still providing plenty of computing power.

Currently, TPUs are an integral part of Google Cloud's infrastructure, and they power AI applications such as image recognition, chatbots, natural language processing, speech synthesis, recommendation engines, and more.

Google uses them in Google Photos, Google Translate, Google Assistant, Google Search, and even Gmail.

TPUs work differently from CPUs and GPUs. A TPU first loads the parameters (the model weights) from memory into its matrix multiplier, and then loads the input data. During the multiplication, each result is passed from one multiplier to the next and added to a running sum along the way. This arrangement is known as a systolic array. Because intermediate results flow directly between neighboring multipliers, no memory access is needed for them during the process, even though the amount of calculation and data passing can be massive.
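The data flow described above can be illustrated with a small cycle-by-cycle simulation. This is a minimal sketch in plain Python, assuming a weight-stationary layout: each processing element (PE) holds one preloaded weight, activations move one step to the right per cycle, and partial sums move one step down. The function name `systolic_matmul` and the skewed input schedule are illustrative choices, not a description of Google's actual TPU hardware.

```python
def systolic_matmul(X, W):
    """Simulate a weight-stationary systolic array computing X @ W.

    X is M x K (activations), W is K x N (weights). PE[k][n] holds W[k][n].
    Activations flow right, partial sums flow down, and finished dot
    products leave through the bottom row of the array.
    """
    M, K, N = len(X), len(W), len(W[0])
    x_reg = [[0] * N for _ in range(K)]  # activation held by each PE
    p_reg = [[0] * N for _ in range(K)]  # partial sum each PE passes down
    Y = [[0] * N for _ in range(M)]

    # The last result leaves PE[K-1][N-1] at cycle (M-1) + (K-1) + (N-1).
    for t in range(M + K + N - 2):
        new_x = [[0] * N for _ in range(K)]
        new_p = [[0] * N for _ in range(K)]
        for k in range(K):
            for n in range(N):
                if n == 0:
                    # Left edge: row k of the input is delayed (skewed) by k cycles.
                    m = t - k
                    new_x[k][n] = X[m][k] if 0 <= m < M else 0
                else:
                    new_x[k][n] = x_reg[k][n - 1]  # from the left neighbor
                above = p_reg[k - 1][n] if k > 0 else 0  # from the PE above
                # W[k][n] stands in for the weight register preloaded in PE[k][n].
                new_p[k][n] = above + new_x[k][n] * W[k][n]
        # Bottom edge: column n emits row m of the result at cycle m + (K-1) + n.
        for n in range(N):
            m = t - (K - 1) - n
            if 0 <= m < M:
                Y[m][n] = new_p[K - 1][n]
        x_reg, p_reg = new_x, new_p
    return Y


if __name__ == "__main__":
    X = [[1, 2], [3, 4], [5, 6]]
    W = [[7, 8, 9], [10, 11, 12]]
    print(systolic_matmul(X, W))  # [[27, 30, 33], [61, 68, 75], [95, 106, 117]]
```

In this model, inputs are read only at the left edge and results leave only at the bottom edge. Every intermediate sum travels between neighboring PEs, which is the property described in the paragraph above.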

Google started using TPUs internally in 2015, but it took some time before they became available to the public. At Google I/O 2016, the company's developer conference, Google presented TPUs to the world. The tech giant explained what they are, showcased the TensorFlow framework, and talked about the Tensor Cores inside them. Google's main claim was that these new processing units would boost the performance of Machine Learning models. TPU v1 was designed mostly for inference and delivered a significant improvement in power efficiency over the CPUs and GPUs of the time. TPU v2 and v3 extended their use to both training and inference, offering higher performance and greater scalability. TPU v4 and TPU v5 (v5e and v5p) further improved the processors and their capabilities.

TPU versions:

The secret to TPU performance is the Tensor Cores. These are the units inside each TPU that handle the specific mathematical operations involved in training and running Machine Learning models. Unlike the general-purpose cores in CPUs and GPUs, Tensor Cores perform matrix multiply-accumulate operations at high speed, and these operations are fundamental to deep learning algorithms. By parallelizing these tasks and optimizing them for neural network workloads, Tensor Cores significantly accelerate computation, shortening training times and making inference more efficient.
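To make "matrix multiply-accumulate" concrete, here is a minimal sketch of a single fully connected (dense) neural network layer, y = Wx + b, computed one multiply-accumulate (MAC) at a time in plain Python. It only illustrates the arithmetic that Tensor Cores speed up. The function name `dense_forward` is hypothetical, and real hardware performs many of these MACs in parallel rather than in a loop.

```python
def dense_forward(W, x, b):
    """Compute y = W @ x + b one multiply-accumulate (MAC) at a time.

    W is a list of rows (one per output), x is the input vector,
    b is the bias vector. Returns (y, number_of_macs).
    """
    macs = 0
    y = []
    for row, bias in zip(W, b):
        acc = bias                 # the accumulator starts at the bias
        for w, xi in zip(row, x):
            acc += w * xi          # one multiply-accumulate
            macs += 1
        y.append(acc)
    return y, macs


if __name__ == "__main__":
    W = [[1, 2, 3], [4, 5, 6]]     # 2 outputs, 3 inputs
    x = [1, 0, -1]
    b = [1, -1]
    print(dense_forward(W, x, b))  # ([-1, -3], 6)
```

A layer with `k` inputs and `n` outputs needs `k × n` MACs per example, so a batch of 128 examples through a 1024 × 1024 layer already takes 128 × 1024 × 1024 = 134,217,728 MACs. Workloads of that shape are what this kind of hardware is built to accelerate.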

TensorFlow is a Machine Learning framework created by Google. It is open source and offers a comprehensive ecosystem for building and deploying machine learning models, including tools for model training, data preprocessing, and serving. It was designed to be flexible and scalable, which also lets Google offer it as a cloud service. TPUs are tightly integrated with TensorFlow, so developers can use TPUs seamlessly within their TensorFlow workflows. Other frameworks built on Google's XLA compiler, such as JAX and PyTorch/XLA, can also run on TPUs.

Use TPUs when you need to:

Use GPUs when you need to:

Use CPUs when you need to:

| Parameter | TPU (Tensor Processing Unit) | GPU (Graphics Processing Unit) | CPU (Central Processing Unit) |
| --- | --- | --- | --- |
| Primary Use | Machine learning and AI workloads | Graphics rendering, machine learning, and parallel processing | General-purpose computing and serial processing |
| Architecture | Custom ASIC (Application-Specific Integrated Circuit) | Many-core architecture | Few cores with high clock speed |
| Performance | Optimized for matrix multiplications and tensor operations | High parallelism for massive computation tasks | High single-thread performance |
| Energy Efficiency | High for specific ML tasks | Moderate, depends on the workload | Varies, generally lower for intensive computations |
| Programming Frameworks | TensorFlow, custom frameworks | CUDA, OpenCL, DirectCompute | General-purpose languages (C, C++, Python, etc.) |
| Latency | Low for ML tasks | Low for parallel tasks, higher for serial tasks | Low for serial tasks, higher for parallel tasks |
| Cost | High, especially for state-of-the-art versions | High, especially for high-end models | Varies, generally lower than GPUs and TPUs |
| Memory Bandwidth | High for tensor operations | High for graphics and parallel processing tasks | Moderate to high, depending on the model |
| Flexibility | Low, designed for specific tasks | Moderate, versatile but best for parallel tasks | High, versatile for a wide range of applications |

It depends on your specific needs. For a small Machine Learning project, a simple CPU is usually enough. If you are looking for a solution for large neural network training, check whether your software stack can run on TPUs (TensorFlow can). If it can't, a GPU solution is the better option for training AI.

If your project is large, involves neural network training or inference, and needs high efficiency and high performance, TPUs can be an excellent choice.

Just be sure to evaluate your workload and performance goals carefully before you make your choice.
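As a rough checklist, the rule of thumb in this section can be written as a tiny decision function. This is a hypothetical helper that encodes only the cases described above. The parameter names are illustrative, the GPU fallback for large workloads that are not neural networks is an assumption, and it is no substitute for benchmarking your actual workload.

```python
def suggest_processor(large_project: bool,
                      neural_network: bool,
                      tpu_ready_framework: bool) -> str:
    """Hypothetical rule of thumb, not a benchmark-based recommendation.

    large_project:       large-scale training or inference workload
    neural_network:      the workload trains or runs a neural network
    tpu_ready_framework: the code uses a framework that can target TPUs
                         (for example TensorFlow)
    """
    if not large_project:
        return "CPU"  # small ML projects: a simple CPU is usually enough
    if neural_network and tpu_ready_framework:
        return "TPU"  # large neural network work on a TPU-capable stack
    # Assumption: any other large workload defaults to a GPU.
    return "GPU"


if __name__ == "__main__":
    print(suggest_processor(large_project=True, neural_network=True,
                            tpu_ready_framework=False))  # GPU
```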
